Remember the days when “AI” in healthcare meant a sci-fi movie plot? Now, it’s a reality in our clinics, hospitals, and even our pockets. From analyzing X-rays in seconds to predicting patient needs, artificial intelligence is reshaping everything. But with great power comes a lot of questions. Who’s in charge of these tools? What happens if they make a mistake? How will they change the way you, a healthcare professional, work every day?

The U.S. government has been asking the same questions. In 2026, a new wave of policies has been enacted to put guardrails on this technology. This isn’t just a bunch of legal jargon; it’s a playbook that will fundamentally change how AI is developed, used, and paid for in the healthcare world. The old rules are out, and a new era of “adaptive oversight” is here.

This article will break down what these new policy changes mean for you, whether you’re a doctor, a nurse, an administrator, or simply someone who cares about the future of patient care.

From Wild West to Structured Framework: A Look at the Old and New

Just a few years ago, AI in healthcare was like the Wild West. Innovators were creating incredible tools at breakneck speed, but regulators were playing catch-up. You might have heard of some of the high-profile case studies—both the successes and the cautionary tales.

- IBM Watson for Oncology was once seen as a revolution in cancer care but ultimately delivered unsafe advice and was shut down after burning through billions in funding.

- GE HealthCare set the bar for success with over 58 FDA-cleared AI tools for radiology that have sped up workflows and improved diagnostic accuracy.

- Viz.ai became a gold standard by using AI to rapidly analyze brain scans, cutting stroke triage times, and securing reimbursement approval.

But for every Viz.ai, there have been plenty of setbacks—AI tools that overpromised, misdiagnosed, or failed to win clinical trust. To address these mounting issues, the U.S. government has now moved to a new ‘lifecycle-based’ regulatory model. The shift is away from heavy federal restrictions and toward a more varied system, where states are creating their own rules.

For example, by mid-2025, over 250 healthcare AI bills had been introduced across more than 34 states. Utah’s AI Policy Act requires disclosure of AI use, California’s laws regulate generative AI in insurance claims, and the Colorado AI Act mandates disclosure and opt-out mechanisms for AI use in healthcare, reinforcing the trend that states are taking the lead in patient protection.

This means the overall system is becoming less controlled at the national level but more varied at the state level, creating both opportunities and risks for everyone involved.

The Three-Headed AI Regulatory Sentinel: FDA, CMS, and HHS

In 2026, the AI policy changes aren’t just coming from one place; they are a collaborative effort from three major federal agencies: the FDA, CMS, and HHS. This collaboration is part of a larger federal AI action plan grounded in three key pillars: accelerating innovation, building AI infrastructure, and strengthening international leadership. Think of it this way:

- FDA (Food and Drug Administration): The FDA is the bouncer at the club, making sure that the AI tools themselves are safe and effective.

- CMS (Centers for Medicare & Medicaid Services): CMS is the accountant, deciding how these tools get paid for.

- HHS (Department of Health and Human Services): HHS is the overarching manager, setting the national safety standards and ensuring everyone is on the same page.

Let’s break down the key policy shifts from each agency.

The FDA’s new philosophy: adaptive oversight

The biggest change from the FDA is a shift from one-time approval to a continuous, adaptive oversight model. In the past, a new medical device would get FDA approval, and that was it. But AI is different; it’s always learning and changing.

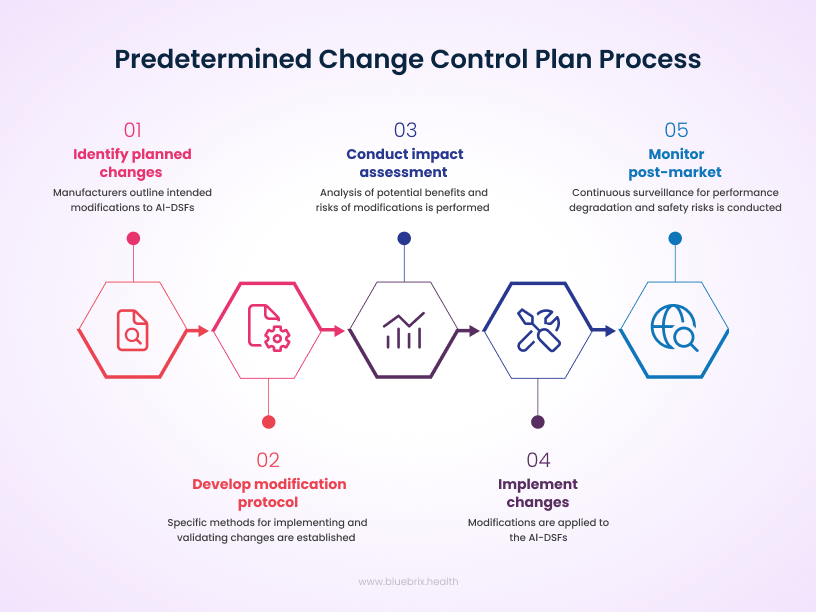

Now, the FDA is introducing the Predetermined Change Control Plan (PCCP). This is a game-changer. It means that an AI developer can get pre-approval for future, planned changes to their algorithm. This allows the tool to evolve and improve without having to go through the lengthy and expensive re-approval process every time.

So, what actually falls under the FDA’s radar in this new model? The short answer: any AI tool that acts as a medical device—especially if clinicians depend on it to diagnose, treat, prevent, or manage a disease. If a doctor is going to use an AI’s output to make a real-world decision about patient care, the FDA wants a say.

Categories that require FDA approval

- AI-enabled medical devices: Think software that reads X-rays, monitors heart rhythms, or analyzes patterns in pathology slides. If it processes or interprets clinical data, it’s in FDA territory.

- Clinical decision support (CDS) software: When an AI tool goes beyond just displaying data and starts offering patient-specific recommendations—particularly if the logic behind the advice isn’t independently reviewable—that’s when FDA review is triggered.

- Software as a medical device (SaMD): Pure software solutions built for medical purposes—like monitoring, diagnosing, or treatment planning—are squarely regulated.

- AI embedded in medical hardware: Devices such as AI-powered ECG machines or smart ultrasound systems that include automated analysis functions also fall under approval requirements.

What’s generally exempt

Not every health-related AI needs to run the FDA gauntlet. Low-risk, general wellness apps—like fitness trackers or meditation guides—usually get lighter oversight. Similarly, tools that simply organize or display medical information without making actionable recommendations often fall under exemptions (thanks to the 21st Century Cures Act).

The FDA’s approval criteria

When deciding if an AI tool makes the cut, the FDA looks at:

- Intended use and risk classification—how much clinical weight the tool carries.

- Safety, effectiveness, and validation—whether the algorithm is proven in real-world settings.

- Transparency and auditability—can the recommendations be explained and reviewed if challenged?

Examples in action

- Imaging AI used in radiology or pathology.

- AI-driven diagnostics for ECG or retinal scans.

- Therapeutic recommendation engines that directly influence treatment decisions.

- Wearable devices with embedded AI for continuous health monitoring.

The bottom line: if an AI tool directly influences clinical judgment or patient management, it needs FDA approval. If it’s only supporting wellness, admin tasks, or non-critical functions, it generally won’t. Combined with adaptive oversight, this risk-based scoping is meant to keep AI safe and effective in real-world settings and to catch and correct issues, like bias or inaccuracies, quickly.

CMS: the money talks

The CMS policy changes are all about aligning reimbursement with the new reality of AI-powered care. Here’s what you need to know:

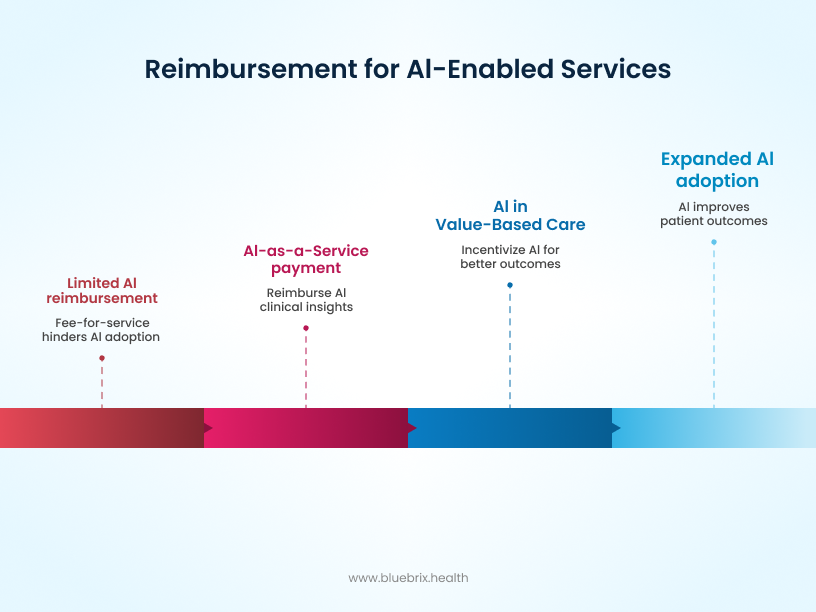

- 2026 Medicare physician fee schedule: The new schedule aims to offer better reimbursement for services that leverage AI, especially those that improve efficiency and patient outcomes. This is a clear signal to providers that using compliant AI tools will be financially beneficial, encouraging adoption across the board.

- “AI as a service”: This new classification recognizes that AI isn’t just a one-time product; it’s a continuous service. This allows for a more flexible reimbursement model that better reflects the value of ongoing AI support, such as continuous data analysis and patient monitoring.

- Value-based care alignment: The new policies also align with the shift toward value-based care, where providers are rewarded for patient outcomes rather than the volume of services. AI, which can help predict risks and personalize treatment, is a perfect fit for this model, and CMS’s new rules will reward providers who use it effectively to improve patient health. Notably, new mandates now require that individual patient circumstances be considered in prior authorization and coverage determinations, rather than relying solely on AI-generated results.

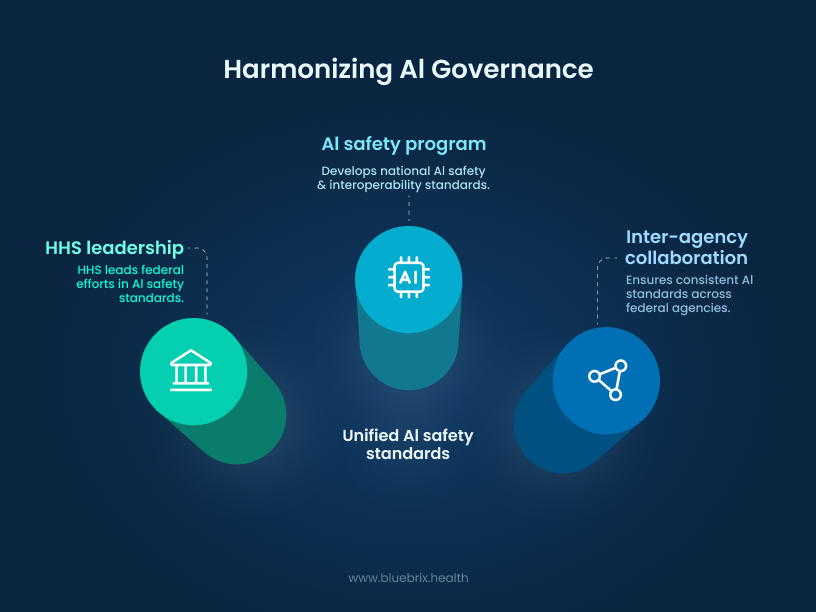

HHS: setting the national standards

HHS is the final piece of the puzzle, focused on creating a cohesive national strategy for AI governance. Key initiatives include:

- AI Safety Program: This program establishes national safety standards for the development and use of AI in healthcare. It’s about ensuring that AI tools are not only effective but also ethical, fair, and secure. In this new landscape, certified EHR vendors must also disclose and mitigate risks associated with AI-based decision support systems.

- Inter-Agency Collaboration: The new policies emphasize that the FDA, CMS, and other agencies will be working together more closely than ever. This coordination is crucial for avoiding confusing or contradictory rules and ensuring a smooth, consistent experience for both AI developers and healthcare providers.

The State Patchwork: Compliance in Motion

Federal guardrails may be tightening, but the real action is happening at the state level. By mid-2025, over 250 healthcare AI bills had been introduced across more than 34 states, and now in 2026 we’re looking at a patchwork of obligations that every healthcare AI deployer needs to track. What’s emerging is less of a single national rulebook and more of a state-by-state compliance map, one that providers, developers, and administrators can’t afford to ignore.

Here’s a snapshot of the states leading the charge:

- Colorado has taken the toughest stance with its AI Act, requiring disclosure whenever AI is used in high-risk decisions, annual impact assessments, anti-bias controls, and record-keeping for at least three years. Enforcement kicks in June 30, 2026, after a delayed rollout—giving hospitals and vendors a short runway to build documentation systems and appeals processes.

- Utah was one of the first movers, with laws already in force since May 2025. Healthcare is squarely in scope: AI use must be disclosed upfront in regulated sectors, and failure to comply can cost $2,500 per violation. For providers, that means updating frontline scripts, training staff, and flagging regulated use cases before they reach patients.

- Texas passed one of the most sweeping laws, requiring plain-language disclosure in any AI-influenced “high-risk” scenario, from healthcare to hiring. Providers must document system intent, monitor risks, and ban manipulative or biased uses. Enforcement began January 1, 2026, so legal teams should already be engaged in auditing every AI touchpoint in clinical workflows.

- California is zeroing in on transparency. Its new rules demand that generative AI developers disclose training data sources, apply watermarking, and issue disclaimers when AI is used in health communications. Effective January 2026, this could change how health systems handle everything from patient outreach campaigns to AI-driven insurance communications.

- Watchlist states like New York, Illinois, Maryland, Massachusetts, and Virginia are not far behind. Draft bills are circulating to regulate AI in health insurance utilization management, mandate state reporting, and impose disclosure requirements. Enforcement is expected between 2026 and 2027, making legislative monitoring a must-do task for compliance teams.

For healthcare leaders, this means one thing: compliance is no longer a federal-only conversation. Hospitals, payers, and AI vendors need to build state-level playbooks, run regular audits, and keep a live watch on legislative trackers. In this new era, being proactive on compliance isn’t just about avoiding penalties—it’s about keeping patient trust intact.
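One way to start a state-level playbook is a small, dated lookup of obligations. The sketch below is illustrative only: the `StateRule` type and `split_by_status` helper are hypothetical names, the obligations are one-line summaries of the list above, and where the article gives only a month, the first day of that month is an assumption.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class StateRule:
    state: str
    obligation: str
    effective: date


# Dates follow the article. Where only a month is given (Utah, California),
# the first of the month is an assumption made for illustration.
STATE_RULES = [
    StateRule("Utah", "Disclose AI use upfront in regulated sectors", date(2025, 5, 1)),
    StateRule("Texas", "Plain-language disclosure in high-risk scenarios", date(2026, 1, 1)),
    StateRule("California", "Generative AI transparency and disclaimers", date(2026, 1, 1)),
    StateRule("Colorado", "Disclosure, impact assessments, record-keeping", date(2026, 6, 30)),
]


def split_by_status(operating_states, as_of):
    """Return (in_force, upcoming) rules for the states an organization operates in."""
    wanted = set(operating_states)
    relevant = [rule for rule in STATE_RULES if rule.state in wanted]
    in_force = [rule for rule in relevant if rule.effective <= as_of]
    upcoming = sorted(
        (rule for rule in relevant if rule.effective > as_of),
        key=lambda rule: rule.effective,
    )
    return in_force, upcoming


in_force, upcoming = split_by_status({"Colorado", "Texas"}, date(2026, 3, 1))
for rule in in_force:
    print(f"IN FORCE  {rule.state}: {rule.obligation}")
for rule in upcoming:
    print(f"UPCOMING  {rule.state} ({rule.effective.isoformat()}): {rule.obligation}")
```

A real tracker would pull from a maintained legislative feed and carry statute citations; the point here is only that obligations are easier to audit when each one has a state, a summary, and an effective date.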

HIPAA & State Privacy: The New Governance Checklist

If state laws are the outer ring of AI regulation, HIPAA remains the core. The Privacy and Security Rules still govern how Protected Health Information (PHI) is collected, stored, and used—but in 2026, the stakes are higher because AI systems are touching that data in new ways. Whether it’s a model transcribing physician notes, an algorithm predicting readmissions, or a vendor training AI on de-identified records, privacy officers and procurement teams now carry a heavier load.

When HIPAA applies

Anytime PHI is created, received, maintained, or transmitted by a covered entity (or its business associate), HIPAA rules apply. AI doesn’t change that. If an algorithm processes PHI for clinical, operational, or analytics purposes, whether during training, deployment, or integration, it must be HIPAA-compliant. Only data that is fully de-identified under HIPAA standards falls outside the rule’s scope.

De-identification: safe harbor vs. expert determination

HIPAA allows two pathways for removing identifiers from data:

- Safe harbor: Strip out all 18 identifier categories (names, geographic subdivisions smaller than a state, dates more specific than the year, phone numbers, SSNs, etc.).

- Expert determination: Bring in a statistician or privacy expert to certify that re-identification risk is very low.

Both methods must be documented and revisited over time, especially as datasets get mixed, repurposed, or enriched with external data.
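To make the safe harbor idea concrete, here is a minimal Python sketch of field-level stripping before records reach an AI pipeline. It is not a compliance tool: the field names are hypothetical, it covers only a subset of the 18 identifier categories, and it ignores free-text fields, which need separate handling.

```python
from datetime import date

# Hypothetical field names; a real schema will differ. This set covers only
# a subset of the 18 Safe Harbor identifier categories.
DIRECT_IDENTIFIER_FIELDS = {
    "name", "street_address", "city", "zip_code",
    "phone", "email", "ssn", "medical_record_number",
}


def strip_identifiers(record):
    """Drop direct identifiers, coarsen dates to the year, group ages over 89."""
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIER_FIELDS:
            continue  # remove the field entirely
        if isinstance(value, date):
            cleaned[f"{field}_year"] = value.year  # keep the year only
        elif field == "age" and isinstance(value, int) and value > 89:
            cleaned[field] = "90+"  # ages over 89 are reported as one category
        else:
            cleaned[field] = value
    return cleaned


record = {
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "state": "CO",
    "admission_date": date(2025, 3, 14),
    "age": 93,
    "diagnosis_code": "I10",
}
print(strip_identifiers(record))
```

Keeping the identifier list in one named set makes it easier to document and revisit as datasets are mixed or enriched with external data.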

BAAs for MLOps and AI vendors

Business Associate Agreements (BAAs) are no longer just for traditional EHR or billing vendors. If an AI platform, MLOps tool, or model developer touches PHI—or even indirectly influences how it’s stored or secured—a BAA should be on file. These agreements must spell out breach notification timelines, limits on data use, encryption and access controls, and liability terms in the event of a breach.

Retention and minimization rules

Under HIPAA, policies and authorizations tied to PHI must be retained for six years, but some states extend this further (seven to ten years, especially for pediatric records). On top of that, the minimum necessary rule is still in force: AI workflows should only access the PHI needed to perform their function, nothing more. At the end of retention, secure disposal—data wiping, shredding, or certified destruction—is mandatory.

Consent in the AI era

Transparency isn’t optional anymore. Providers using AI-powered tools in clinical interactions should disclose their use and obtain explicit patient consent. For example:

“To provide you with the best care, I’m using an AI tool that helps with note-taking and analysis. Your information will remain private, and you can opt out at any time. Do I have your permission to use this tool during our visit?”

States like California and Washington now demand detailed, written consents for AI tools that process health data. This means consent forms should outline the purpose of the tool, what data it collects, security protections, opt-out rights, and review processes.

Data residency: the emerging battleground

HIPAA requires vendors to document where data lives, encrypt it in transit and at rest, and log access. But states are now layering stricter demands:

- California, Washington, and New York are pushing rules to keep PHI within state borders unless explicit patient consent is given for transfer.

- Cloud and AI procurement contracts now need to specify data center locations, audit rights, and breach notification protocols.

- Health systems with international ties must account for GDPR, LGPD, and other localization statutes, adding another layer of complexity.

In general, HIPAA still sets the floor, but states are rapidly raising the ceiling. For healthcare organizations, compliance now means layering federal rules with state-specific privacy demands, rewriting procurement contracts, and training clinicians on patient-facing consent.

Beyond Certification: What EHRs Must Prove in 2026

As privacy and compliance tighten, certified EHR vendors are facing their own wave of mandates. The Office of the National Coordinator for Health IT (ONC) is no longer just nudging vendors toward interoperability; it’s demanding it. From 2026 onward, certified systems must prove they can safely integrate AI, disclose algorithmic risks, and deliver seamless FHIR/SMART-based data exchange.

In practice, that means EHR vendors aren’t just building features—they’re being held accountable for how those features perform in the wild. Transparent reporting, continuous interoperability testing, and error-proof AI deployment have become non-negotiable pillars.

ONC/FHIR expectations for 2026

Under ONC’s HTI-1 final rule and forthcoming HTI-2 proposals, the baseline for certification is rising:

- Native FHIR R4 API support is mandatory, with updates aligned to the US Core Implementation Guide.

- SMART on FHIR must be enabled for patient-facing apps and third-party integrations—no manual roadblocks allowed.

- By July 2026, EHRs must expose real-time access to patient data, including medications, labs, and conditions.

- Starting 2027, vendors will submit annual interoperability reports, covering FHIR usage, bulk data exports, and immunization data submissions.
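For a sense of what patient data access over FHIR R4 looks like, the sketch below builds standard search URLs for medications, labs, and conditions. The base URL and patient ID are placeholders, the helper names are hypothetical, and a real client would also need SMART on FHIR authorization before sending any request.

```python
from urllib.parse import urlencode


def fhir_search_url(base_url, resource_type, **params):
    """Build a FHIR search URL such as [base]/Condition?patient=123."""
    query = urlencode(sorted(params.items()))  # sorted for a stable, testable URL
    return f"{base_url.rstrip('/')}/{resource_type}?{query}"


def patient_summary_urls(base_url, patient_id):
    """Search URLs for the three data classes named above."""
    return {
        "medications": fhir_search_url(base_url, "MedicationRequest", patient=patient_id),
        "labs": fhir_search_url(
            base_url, "Observation", patient=patient_id, category="laboratory"
        ),
        "conditions": fhir_search_url(base_url, "Condition", patient=patient_id),
    }


# Placeholder endpoint and ID, used only to show the URL shapes.
urls = patient_summary_urls("https://ehr.example.org/fhir/r4/", "123")
for label, url in urls.items():
    print(f"{label}: {url}")
```

Each URL returns a FHIR Bundle when queried with a valid access token; the exact resources and search parameters a certified system must support are defined by the US Core Implementation Guide.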

Vendor obligations in detail

Certified EHR vendors now need to check more boxes than ever:

- Structured reporting: Beginning in 2026, interoperability metrics and patient access data must be tracked and shared at the product level.

- AI disclosure: Vendors must identify where AI is embedded, how it works, and what risks it carries—including bias, governance controls, and mitigation strategies.

- Network participation: Systems are expected to integrate with CMS-aligned networks to eliminate silos and smooth patient transitions across providers.

AI risk and interface risk disclosure

AI is only as safe as the system it lives in. ONC now requires vendors to publish documentation detailing:

- The role of any AI models in decision support.

- Sources of potential bias and limits of explainability.

- Error handling strategies for integration risks (e.g., mismatched data mappings or version conflicts in FHIR endpoints).

This isn’t just about compliance—it’s about equipping healthcare providers to understand when and how AI can be trusted.

Raising the bar on integration testing

Gone are the days when an EHR vendor could run a demo and call it a day. ONC now expects rigorous, documented testing of every integration layer, including:

- Authentication and security: SMART on FHIR app registration, OAuth2 authentication, and token refresh under real-world conditions.

- Edge cases: Multiple patient IDs, unusual medication profiles, and incomplete or missing data fields.

- Load and resilience: Stress testing systems under simultaneous requests across multiple production and sandbox environments.

- Error handling: Standardized fallbacks for unsupported operations, structured logging of failed API calls, and regression testing after every upgrade.
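The edge-case and error-handling items above can be exercised with very small tests. As a hedged illustration, this sketch reads medication names from a FHIR R4 search Bundle (represented as a plain dictionary) and tolerates missing or incomplete fields instead of crashing. The `medication_names` helper and the sample bundle are hypothetical; a production integration would also handle `medicationReference` and paging.

```python
import logging

logger = logging.getLogger("fhir_integration")


def medication_names(bundle):
    """Return (names, skipped) from a Bundle of MedicationRequest resources."""
    names, skipped = [], 0
    for entry in bundle.get("entry") or []:
        resource = entry.get("resource") or {}
        if resource.get("resourceType") != "MedicationRequest":
            continue  # ignore other resource types and empty entries
        concept = resource.get("medicationCodeableConcept") or {}
        codings = concept.get("coding") or []
        # Prefer the free-text label, then fall back to the first coding display.
        name = concept.get("text") or next(
            (c.get("display") for c in codings if c.get("display")), None
        )
        if name:
            names.append(name)
        else:
            skipped += 1
            logger.warning(
                "MedicationRequest %s has no usable medication name",
                resource.get("id", "<no id>"),
            )
    return names, skipped


sample_bundle = {
    "resourceType": "Bundle",
    "entry": [
        {"resource": {"resourceType": "MedicationRequest",
                      "medicationCodeableConcept": {"text": "Metformin 500 mg"}}},
        {"resource": {"resourceType": "MedicationRequest",
                      "medicationCodeableConcept": {
                          "coding": [{"code": "example-code"},
                                     {"display": "Amlodipine 5 mg"}]}}},
        {"resource": {"resourceType": "MedicationRequest", "id": "incomplete-1"}},
        {"resource": {"resourceType": "Observation"}},
        {},
    ],
}
names, skipped = medication_names(sample_bundle)
print(names, skipped)
```

Returning a skipped count alongside the results gives regression tests something to assert on after every upgrade, rather than relying on the absence of exceptions.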

How we’re preparing at blueBriX

At blueBriX, we’ve been building with this new reality in mind since long before these requirements were made official. Interoperability, AI transparency, and regulatory agility aren’t afterthoughts for us; they’re baked into how our platform is designed.

Interoperability as a foundation

The blueBriX platform was built to connect. With native support for integration across specialties, multilingual environments, and a library of pre-configured APIs, we’re already aligned with ONC’s FHIR R4 and SMART on FHIR expectations. That means real-time patient data exchange is practical and scalable for providers who need information without barriers.

Transparent AI, designed for trust

AI will only succeed in healthcare if clinicians trust it. That’s why our AI Agents are developed with clear governance controls, explainability in outputs, and the flexibility to embed risk documentation as federal and state policies demand. Our goal is simple: keep clinicians in charge while giving them the tools to make faster, better-informed decisions.

Building for agility

Regulation is moving quickly, but our DevOps and no-code architecture mean we can move just as fast. Continuous integration, automated testing, and modular deployment allow us to adapt seamlessly to new ONC reporting requirements, whether it’s interoperability metrics or AI risk transparency.

Ready for value-based care

Finally, we know that compliance isn’t the finish line; it’s the foundation. Our analytics and predictive care modules are designed to support value-based care, making it easier for providers to participate in CMS-aligned networks and outcome-focused models.

Clinical Validation: Proving AI Works in the Real World

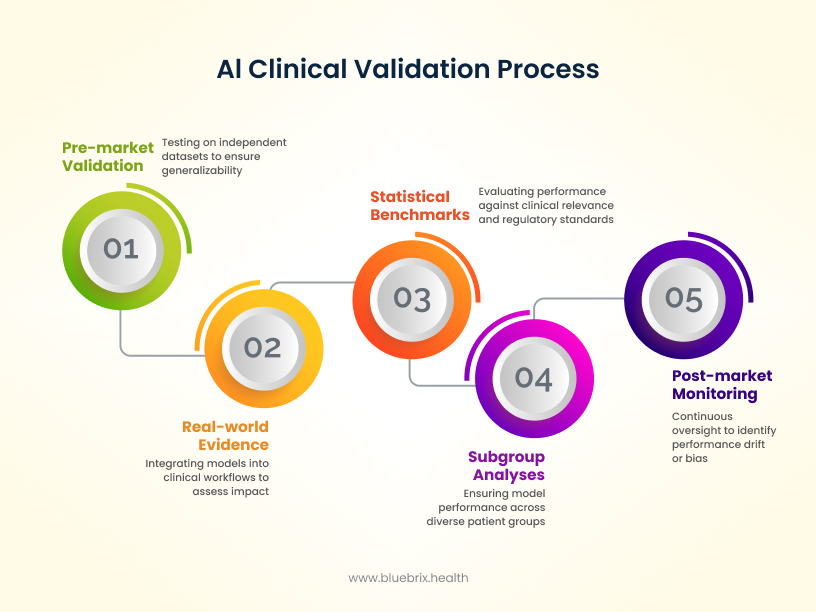

AI in healthcare doesn’t get a free pass just because it’s innovative. Instead, it has to prove itself. Before reaching the market, algorithms are expected to show strong performance through external validation on independent datasets, so results aren’t skewed by the development environment. They also need subgroup analyses to make sure the model works consistently across patient populations—whether by age, race, or sex—without introducing new bias. Increasingly, regulators are encouraging pragmatic and adaptive trials, where AI tools are tested in conditions that mirror everyday practice. This ensures validation isn’t just theoretical but grounded in how clinicians and patients actually experience care.
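A subgroup analysis can be as simple as computing the same metric per group and comparing. The sketch below computes sensitivity (true positive rate) by subgroup on made-up data; the function names and the sample rows are illustrative assumptions, and a real validation would add confidence intervals and far larger samples.

```python
from collections import defaultdict


def sensitivity_by_group(rows):
    """rows: iterable of (group, true_label, predicted_label) with 0/1 labels."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 1:  # sensitivity only looks at actual positives
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def largest_gap(metric_by_group):
    """Difference between the best and worst performing subgroup."""
    values = list(metric_by_group.values())
    return max(values) - min(values)


# Made-up validation rows: (subgroup, true label, model prediction).
rows = [
    ("female", 1, 1), ("female", 1, 1), ("female", 1, 1), ("female", 1, 0),
    ("female", 0, 0),
    ("male", 1, 1), ("male", 1, 0), ("male", 0, 1),
]
per_group = sensitivity_by_group(rows)
print(per_group, largest_gap(per_group))
```

A large gap between subgroups is a prompt for closer review, not a verdict on its own, since small subgroups can swing widely by chance.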

But validation doesn’t end once a product is cleared. The real test begins post-market, where models must be continuously monitored in real-world clinical workflows. Evidence from prospective deployments—whether in hospital EHRs or bedside decision support—helps confirm that AI is not only accurate but also safe and effective in practice. Importantly, Predetermined Change Control Plans (PCCPs) provide a structured way to trigger retraining whenever performance drifts, safety signals emerge, or new population data reveals bias. Instead of waiting for failures, AI oversight is shifting toward continuous improvement, with retraining, recalibration, and threshold adjustments built in as part of the lifecycle.
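The idea of a performance-drift trigger can be sketched as a rolling window compared against a validated baseline. Everything specific here is an assumption for illustration: the `DriftMonitor` class, the accuracy metric, the window size, and the tolerance. An actual PCCP would define its own metrics, thresholds, and response procedures.

```python
from collections import deque


class DriftMonitor:
    """Flag when rolling accuracy falls below baseline minus a tolerance."""

    def __init__(self, baseline_accuracy, tolerance, window_size):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window_size)  # oldest outcomes drop off

    def record(self, prediction_was_correct):
        """Add one confirmed outcome; return True if a review should be triggered."""
        self.outcomes.append(bool(prediction_was_correct))
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data for a stable estimate yet
        rolling_accuracy = sum(self.outcomes) / len(self.outcomes)
        return rolling_accuracy < self.baseline_accuracy - self.tolerance


# Hypothetical numbers: validated at 90% accuracy, review if the last 10
# confirmed cases fall more than 5 points below that.
monitor = DriftMonitor(baseline_accuracy=0.90, tolerance=0.05, window_size=10)
flags = [monitor.record(outcome) for outcome in [True] * 8 + [False] * 2]
print(flags[-1])
```

In practice the trigger would open a documented review rather than retrain automatically, so that a human decides whether the drift reflects a model problem, a data problem, or a change in the patient population.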

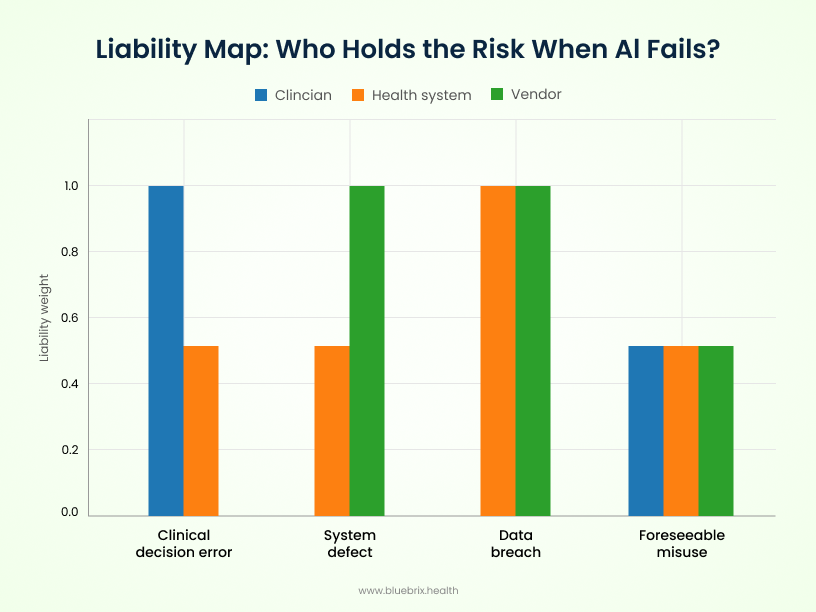

The Liability Map: Who Holds the Risk When AI Fails?

Even with new oversight models, there’s no escaping the toughest question: when an AI tool causes harm, who is on the hook? The answer depends on the type of failure — and the lines aren’t always clean.

- Clinicians carry liability if they misuse or over-rely on AI outputs, especially when those actions breach the accepted standard of care. Following a flawed recommendation without applying clinical judgment could open the door to malpractice claims.

- Health systems face exposure when institutional failures occur — like poor oversight, inadequate training, or weak vendor management. If a hospital deploys an unsafe tool without proper integration or monitoring, it may share liability for downstream harm.

- AI vendors are typically liable for defects in the system itself: software bugs, safety flaws, or misrepresentations about capabilities. Vendors can’t contract away responsibility for personal injury, and non-compliance with FDA or HIPAA rules only strengthens the case against them.

Contractual guardrails: what to lock in

To protect themselves, health systems are rewriting contracts with sharper AI-specific clauses:

- Indemnity tailored by use case — covering regulatory fines, third-party claims, and breaches.

- Data breach SLAs — clear notification timelines, patient communication support, and remediation responsibilities.

- Regulatory cooperation clauses — obligating vendors to support audits and adapt to new federal and state rules.

- Audit rights — giving health systems authority to review vendor compliance and performance.

- BAA integration — ensuring HIPAA-compliant handling of PHI with explicit safeguards.

- Change management triggers — requiring renegotiation if the AI system undergoes material updates or is repurposed.

The liability landscape is evolving just as fast as the technology itself. Without clear contracts, updated insurance, and ongoing risk assessment, hospitals and vendors risk being blindsided when AI doesn’t perform as promised. For AI to succeed in healthcare, every stakeholder needs not just a compliance plan, but a liability playbook.

On the Front Lines: What Clinicians Will Experience

So, how will all this impact your daily life on the front lines of patient care?

- Smarter clinical workflows: You will likely see AI tools integrated into your existing systems, from EHRs to diagnostic machines. These tools will handle routine tasks like medical documentation, freeing you up to spend more time with patients. For example, AI can help with transcribing notes and summarizing reports, which could help reduce administrative burden.

- Better-informed decisions: AI will act as a powerful co-pilot, providing you with real-time data analysis and insights to help you make more accurate and timely diagnoses. It’s like having a second opinion based on a massive database of information.

- The human element remains king: This is the most important takeaway. The new policies are designed to ensure that AI does not replace you, but rather augments your skills. AI will handle the “rote, repetitive, and real-time” tasks, while you remain the “arbiter of judgment, empathy, and accountability.” Trust in AI ultimately depends on trust in clinicians.

At the Bedside: What Patients Can Expect

For patients, the changes are aimed at creating a more personalized, equitable, and safe healthcare experience.

- Personalized care: AI can analyze a patient’s genetics and health history to create highly personalized treatment plans. This precision medicine approach can lead to better outcomes and fewer side effects.

- Improved access and equity: AI has the potential to help bridge the gap for the 4.5 billion people who lack access to essential healthcare services. For instance, AI could help in triaging patients and detecting early signs of diseases.

- Transparent use: New regulations require transparent consent and patient education around the use of AI in care. For example, new laws like the Texas Responsible Artificial Intelligence Governance Act (HB 149) and a growing number of similar state laws require explicit patient consent for data use and clear “nutrition-label”-like disclosures about how an AI model makes its decisions. This ensures patients understand how their data is being used and can make informed decisions about their care.